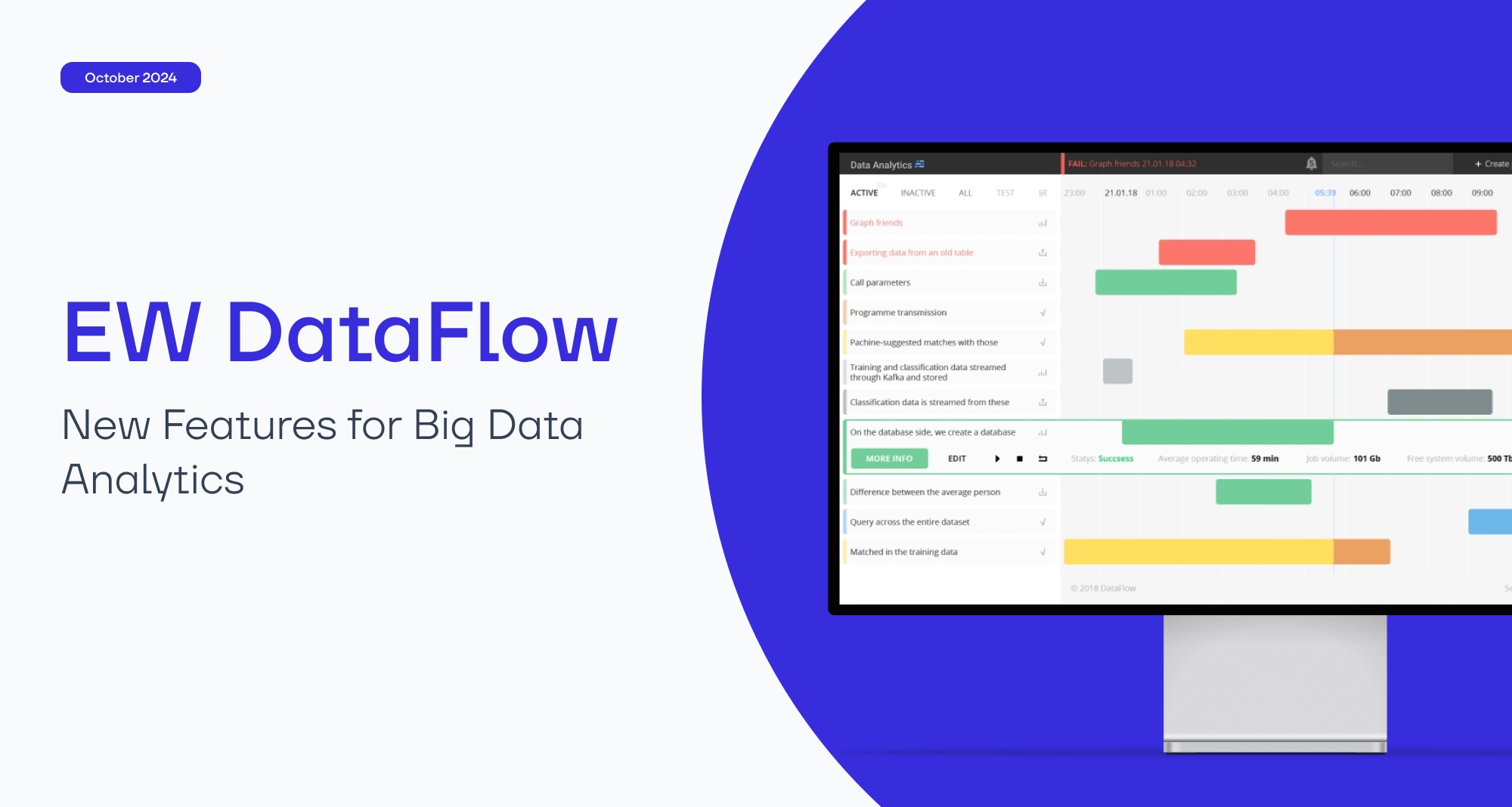

Flexible Filters and Automatic Restart of Analytical Tasks: New EW DataFlow Release

18 October 2024

Managing tasks in a Hadoop cluster just got even more efficient with the latest EW DataFlow updates. Here's a look at the new features we've added in response to customer requests and how they simplify the day-to-day work of data engineers and data scientists.

The latest EW DataFlow release features new capabilities that streamline the management of computational resources and analytical tasks. Learn more in this article.

EW DataFlow is a big data platform that enables users to launch, configure, and monitor computational tasks (jobs) in a low-code environment within the Hadoop ecosystem.

Flexible Task Filtering on the Home Page

Task

Previously, the main screen displayed only 10–20 analytical tasks (jobs) at a time. In real-world scenarios, however, the number of tasks can run into the hundreds, making it difficult to find the right one by scrolling or searching by name.

Solution

We have introduced filters and sorting options for jobs based on various criteria, allowing users to quickly find the tasks they need and focus on the most important ones.

Additionally, new filters help identify tasks with errors or execution delays. This enables data specialists to address issues promptly, for instance by concentrating on jobs that are running longer than usual and consuming excessive resources.

Main screen filters include: task author (Created by me), type, status, execution dates, and custom tags
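To make the idea of combining these criteria concrete, here is a minimal sketch in Python. It is not EW DataFlow code: the `Job` fields, the `filter_jobs` function, and the sample job types and statuses are all hypothetical and only mirror the filter names listed in the caption above.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Job:
    # Hypothetical job record; field names mirror the filters in the caption.
    name: str
    author: str
    job_type: str
    status: str
    last_run: date
    tags: set = field(default_factory=set)


def filter_jobs(jobs, *, author=None, job_type=None, status=None,
                run_from=None, run_to=None, tags=None):
    """Return jobs matching every criterion that is not None."""
    def matches(job):
        return (
            (author is None or job.author == author)
            and (job_type is None or job.job_type == job_type)
            and (status is None or job.status == status)
            and (run_from is None or job.last_run >= run_from)
            and (run_to is None or job.last_run <= run_to)
            # A job matches when it carries all requested tags.
            and (tags is None or set(tags) <= job.tags)
        )
    return [job for job in jobs if matches(job)]


# Illustrative data only.
jobs = [
    Job("daily_sales_import", "alice", "import", "failed", date(2024, 10, 17), {"sales"}),
    Job("user_scoring", "bob", "spark", "running", date(2024, 10, 18), {"ml"}),
    Job("weekly_report", "alice", "export", "succeeded", date(2024, 10, 14), {"sales", "reports"}),
]

failed_mine = filter_jobs(jobs, author="alice", status="failed")
print([job.name for job in failed_mine])  # ['daily_sales_import']
```

Each criterion is optional, so "Created by me" plus a status filter narrows a list of hundreds of jobs to the few that need attention.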

Automatic Import Restart

Task

Import tasks from external sources don’t always complete successfully because they depend on external systems. For example, data engineers might schedule an import at 11 a.m., but external databases may not be ready for export until 11:30 a.m. Since the data wasn’t available at the scheduled time, the import in EW DataFlow would fail with an error.

In such cases, platform users had several workarounds, but all relied on human intervention. For instance, after a failed import, the job had to be restarted manually. If specialists forgot to do this, data for a certain period could become unavailable or outdated.

Solution

To automate the process, we added an automatic job restart feature for import operations. If a task fails to complete on the first attempt, EW DataFlow will try to run it up to three more times, with a one-hour interval (e.g., at 11 a.m., 12 p.m., and 1 p.m.). This increases the likelihood of successful task completion, even if the data becomes available later than planned. Automation also helps reduce the risk of human error, ensuring data remains accessible and up to date.

The automatic import restart feature is enabled by default, but it can be disabled if necessary; users have full control over the process
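The retry behaviour described above can be sketched as a small simulation. This is an illustration under stated assumptions, not the platform's implementation: `retry_schedule` and `run_with_retries` are hypothetical names, the sketch reads "up to three more times" as three retries after the initial attempt (so the retry count is left as a parameter), and it only walks through the scheduled times instead of actually waiting.

```python
from datetime import datetime, timedelta


def retry_schedule(first_attempt, max_retries=3, interval=timedelta(hours=1)):
    """Times at which retries would run after a failed first attempt."""
    return [first_attempt + interval * n for n in range(1, max_retries + 1)]


def run_with_retries(run_import, first_attempt, max_retries=3,
                     interval=timedelta(hours=1)):
    """Try the first attempt, then each retry, until one succeeds.

    run_import(at) is a hypothetical callable returning True on success.
    Returns the time of the successful attempt, or None if all attempts fail.
    """
    attempts = [first_attempt] + retry_schedule(first_attempt, max_retries, interval)
    for at in attempts:
        if run_import(at):
            return at
    return None


# Scenario from the text: import scheduled for 11:00, source ready at 11:30.
ready_at = datetime(2024, 10, 18, 11, 30)
succeeded_at = run_with_retries(lambda at: at >= ready_at,
                                datetime(2024, 10, 18, 11, 0))
print(succeeded_at)  # 2024-10-18 12:00:00
```

In this scenario the 11:00 attempt fails and the first retry at 12:00 succeeds, with no manual restart needed.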

Saving Logs and Execution History After Task Restart

Task

Previously, when an analytical task was stopped, for instance, due to configuration changes, all execution history and logs were reset. When another user opened the restarted task with an empty history, it took time to confirm that no data had been lost. Additionally, it was impossible to compare task performance before and after changes, such as whether the task executed faster or slower, or what errors had occurred previously.

Solution

Now, all execution data is preserved even after a task is stopped or restarted. This allows users to easily track performance changes and execution times, identify potential issues, and optimize the code.

In future updates, we plan to make logs more informative and structured. For example, execution data will be broken down by task stages: preparation, execution, and completion. This will help data specialists quickly pinpoint where errors occurred and how to resolve them.

If it seems that a task is taking longer to complete after a restart, this can be verified using the saved logs, execution times, and history

Convenient Visualization of Task Dependencies

Task

Previously, EW DataFlow users encountered difficulties when visualizing task dependencies. Connections between jobs were represented by thin lines on the graph. As the number of tasks grew, the graph became overloaded with connections, making it difficult to read and analyze jobs or identify potential issues.

Solution

Now, task dependencies are not displayed all at once. Users can select which lines to show by clicking the "+" button to the right or left of a job block. This reveals additional connections, making the visualization more manageable and helping users quickly understand which tasks in the job chain may have led to an error.

The analysis of complex dependencies has become more convenient and intuitive, speeding up the resolution of work tasks
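The on-demand expansion described above can be pictured as a view that starts with one job and reveals neighbouring connections only when asked. The sketch below is an assumption-laden illustration, not the product's code: `DependencyView` and its methods are hypothetical, and it assumes the "+" on the left reveals upstream jobs while the "+" on the right reveals downstream jobs.

```python
class DependencyView:
    """Tracks which jobs and connections are currently shown."""

    def __init__(self, edges, start):
        # edges are (upstream_job, downstream_job) pairs.
        self.upstream = {}
        self.downstream = {}
        for src, dst in edges:
            self.downstream.setdefault(src, set()).add(dst)
            self.upstream.setdefault(dst, set()).add(src)
        self.visible_jobs = {start}
        self.visible_edges = set()

    def expand(self, job, side):
        """Reveal the connections on one side of a visible job."""
        if job not in self.visible_jobs:
            raise ValueError(f"{job!r} is not visible yet")
        if side == "left":      # assumed: upstream dependencies
            new_edges = {(up, job) for up in self.upstream.get(job, ())}
        elif side == "right":   # assumed: downstream dependents
            new_edges = {(job, down) for down in self.downstream.get(job, ())}
        else:
            raise ValueError("side must be 'left' or 'right'")
        self.visible_edges |= new_edges
        for src, dst in new_edges:
            self.visible_jobs.update((src, dst))
        return new_edges


# Illustrative job chain: two imports feed a cleaning job, which feeds a report.
edges = [
    ("import_orders", "clean_orders"),
    ("import_users", "clean_orders"),
    ("clean_orders", "report"),
]
view = DependencyView(edges, start="report")
view.expand("report", "left")        # reveals clean_orders
view.expand("clean_orders", "left")  # reveals both import jobs
print(sorted(view.visible_jobs))
# ['clean_orders', 'import_orders', 'import_users', 'report']
```

Walking left from a failed job in this way shows only the chain that could have caused the error, instead of every connection in the graph.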

One-Click Instance Termination for Resource Optimization

Task

When working with large datasets, it's crucial to have the flexibility to manage computational resources efficiently and free them for higher-priority tasks. Data specialists can do this by pausing a single job instance, that is, a specific execution of a job for one unit of time: an hour, a day, or a week.

Previously, data scientists had to manually stop jobs entirely and could then forget to restart them at the right time, leaving data inaccessible or outdated.

Solution

Now, EW DataFlow users can stop a job instance by clicking "Kill Instance". The system will automatically restart the paused instance according to a specified schedule (hourly, daily, weekly), minimizing downtime and ensuring data accessibility.

"Kill Instance" enables more flexible resource management in a Hadoop cluster

Other Updates

In addition to the changes listed above, we introduced other minor updates in EW DataFlow. For example, we have improved the code editor to make it more comfortable for working with scripts. We have also added the ability to split files when exporting to external systems, which helps to flexibly manage data offloading. It's also easy to see which jobs are using preconfigured resource presets.

Many of these updates have been developed in response to requests and suggestions from EW DataFlow users. We value your feedback and are committed to continuously improving our platform to meet the needs of our customers, enhance process efficiency, and provide flexible task management within the Hadoop cluster.

Learn more about EW DataFlow